So I work in multiple M365 tenants, and even after years of UserVoice feedback and plenty of complaints, Teams is still dead awful at working across two tenants. It only gets worse if you decide to try guest accounts in Azure AD, which really just add MORE accounts to switch between.

That said, I work primarily in two tenants and was recently walking some of my team through how I manage both. So here goes.

First, some short pointers.

- Choose your primary tenant. This is the one you sign into the desktop apps with.

- Chromium-based Edge with signed-in profiles can help with this.

- Signing into the Azure AD tenant on your Windows machine (via ADAL) can also ease the pain.

Sidenote: using ADAL, where you sign into Azure AD through Windows, is probably not required. It can make things a bit easier, though, because Edge can leverage those credentials when you HAVE to switch between profiles, since you are already signed in.

With that said, I have seen issues setting up a secondary synced profile in Edge that isn't first attached via ADAL IF your first profile IS synced via ADAL. So if you run into problems, adding the account as a "work account" in Windows Settings (Accounts > Access work or school) can help.

Once you have a primary selected and signed in, you should also sign into Edge and sync the profile with your M365 account. This also makes moving machines and upgrades easier, and since this is typically a work use case, privacy concerns here basically go out the window.

Once the initial setup is done, it's time to set up your secondary account. The basic steps are:

- Sign into a secondary profile in Edge and sync it with your other tenant.

- Enable automatic profile switching.

- Configure the default profile for external links to use your primary profile. This makes sure any links clicked in places like Outlook open in your primary profile.

- Go to the profile preferences for sites and add overrides for your secondary tenant. A commonly used one is shortname-my.sharepoint.com for OneDrive links. Anything that uses the secondary M365 tenant can go here.
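To make the routing rules above concrete, here is a small conceptual model of how a clicked link ends up in one profile or the other. This is a sketch of the behavior as described, not Edge's actual implementation: the profile labels and the `contoso` shortname are hypothetical, and it assumes exact hostname matching for simplicity.

```python
from urllib.parse import urlparse

# Hypothetical profile labels; Edge shows whatever names you gave your profiles.
PRIMARY = "Work (primary tenant)"
SECONDARY = "Work (secondary tenant)"

# Hypothetical override list mirroring the per-site entries you add in Edge.
# "contoso" stands in for the secondary tenant's shortname.
SITE_OVERRIDES = {
    "contoso-my.sharepoint.com": SECONDARY,  # OneDrive for Business links
    "contoso.sharepoint.com": SECONDARY,     # SharePoint sites
}


def pick_profile(url: str, default: str = PRIMARY) -> str:
    """Return the profile a link should open in: an override if the host
    matches exactly, otherwise the default profile for external links."""
    # urlparse().hostname is already lowercased; "or ''" guards odd URLs.
    host = (urlparse(url).hostname or "").lower()
    return SITE_OVERRIDES.get(host, default)


if __name__ == "__main__":
    for link in (
        "https://contoso-my.sharepoint.com/personal/jdoe/Documents",
        "https://outlook.office.com/mail/",
    ):
        print(link, "->", pick_profile(link))
```

The point of the model is the fallback: anything you haven't explicitly overridden lands in the primary profile, so the override list only needs to cover the secondary tenant's hosts.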

So now any links going to your secondary tenant should open in a profile that is already signed into that tenant with cached credentials. This avoids things like the warning a browser session throws if you switch accounts in another tab. Worse, if you hit "refresh" at that point, you pretty much just get dumped to a generic landing page in whatever tenant you are signed into.

Now for the Teams part. Edge can turn any website into an "app" in Windows. It's basically just Edge in a hybrid kiosk mode, but it makes the application launchable from the Start menu and lets you pin it to your taskbar.

So open your secondary profile in Edge, head to teams.microsoft.com, and log in. Then open the ... menu, go to Apps, and choose "Install this site as an app."

Click through the confirmation, choose whether you want it to auto-start, pin to the desktop, etc., and hit Allow.

If you ever want to remove it, simply right-click the app in the Start menu and hit Uninstall.
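If you'd rather script the launch (for example, from your own shortcut), Chromium-based Edge accepts the `--profile-directory` and `--app` command-line switches, which open a URL in an app-style window under a specific profile. The sketch below only builds that command. It assumes Edge's default install path and a secondary profile folder named `Profile 1` (hypothetical; check "Profile path" on edge://version in your secondary profile). Note that the shortcut Edge creates when you install the site as an app typically points at an app ID instead, so treat this as an alternative, not a copy of what the installer does.

```python
import subprocess
import sys
from pathlib import PureWindowsPath

# Assumed default install location; adjust if Edge lives elsewhere on your machine.
EDGE_EXE = PureWindowsPath(
    r"C:\Program Files (x86)\Microsoft\Edge\Application\msedge.exe"
)


def teams_window_command(profile_dir: str,
                         url: str = "https://teams.microsoft.com") -> list[str]:
    """Build a command that opens `url` in an app-style window using the
    given Edge profile folder (e.g. "Profile 1", as shown on edge://version)."""
    return [str(EDGE_EXE), f"--profile-directory={profile_dir}", f"--app={url}"]


if __name__ == "__main__":
    cmd = teams_window_command("Profile 1")  # hypothetical secondary profile folder
    print(cmd)
    if sys.platform == "win32":
        subprocess.Popen(cmd)  # only actually launch Edge on Windows
```

Passing the arguments as a list avoids having to quote the space in `Profile 1` yourself.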

The next change worth making is to go back to the browser version of Teams in Edge, click the lock icon in the address bar, and change the site permissions to the following. This lets you join meetings without being prompted to allow the mic, camera, etc.

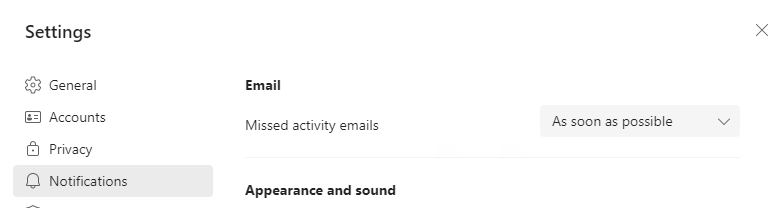

One last thing I do is change my notification settings in any Teams tenant that isn't my primary and set missed activity emails to "As soon as possible." This ensures I don't miss messages, within reason, since I should also see them in email.

And voilà, I now have two independent instances of Teams on my desktop that I can pin to my taskbar, etc.